This fascinating post by Jeremiah Grossman is titled “All these vulnerabilities, rarely matter”. I’d highly encourage everyone to read it.

There is widespread agreement, and growing adoption of the idea, that security awareness needs to shift left.

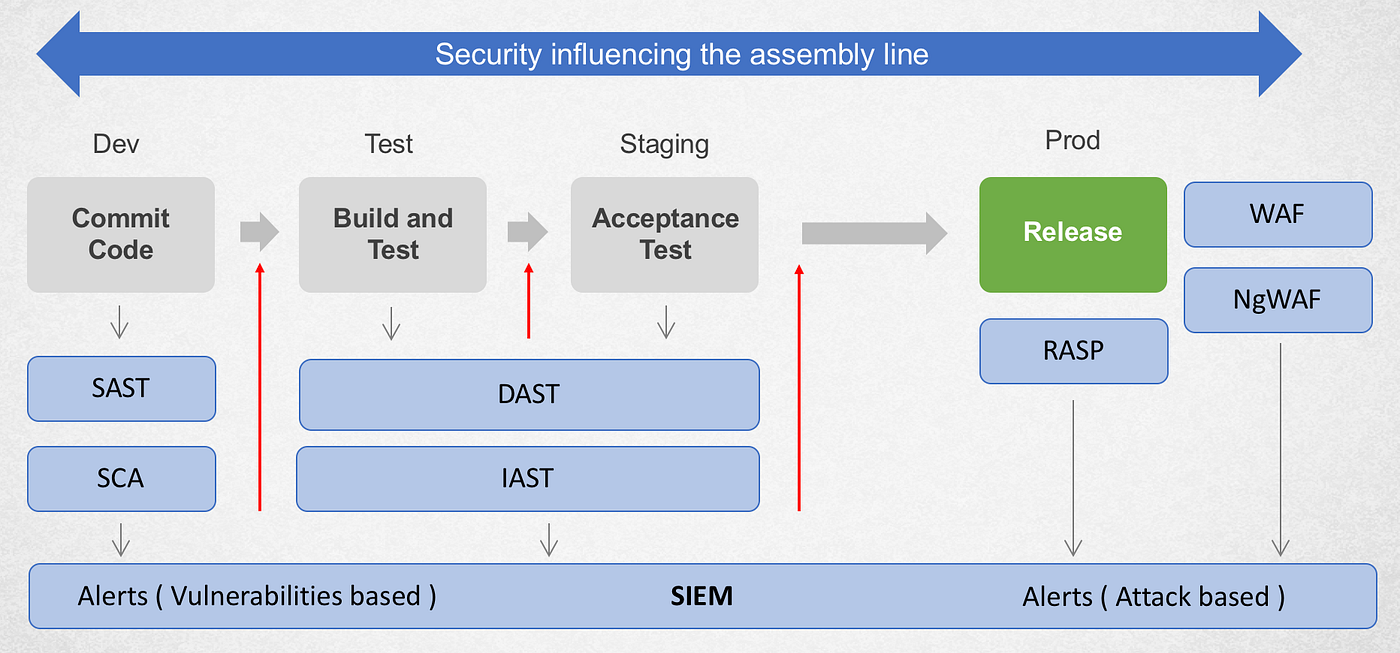

The shift left (verb) paradigm requires measuring the security posture of an application in the early phases of its life cycle. With the motto “find and fix vulnerabilities faster”, companies are bolting SAST, DAST, IAST and RASP tools onto their software supply chain. Because these tools raise alerts in their own silos, a Security Information and Event Management (SIEM) tool then has to be deployed to correlate those alerts.

Security Inserted into DevOps Pipeline

In Jeremiah’s post (linked above), a few excerpts caught my attention.

The primary purpose of SAST is to find vulnerabilities during the software development process BEFORE they land in production where they’ll eventually be found by DAST and/or exploited by attackers.

If you ask someone who is an expert in both SAST and DAST, specifically those with experience in this area of vulnerability correlation, they’ll tell you the overlap is around 5–15%. Let’s state that more clearly, somewhere between 5–15% of the vulnerabilities reported by SAST are found by DAST

~ excerpt from Jeremiah Grossman’s post

Is the 5–15% overlap conjecture absolute or relative?

Before we speak of instruments to measure, let’s pause and ask ourselves about what we are trying to measure!

Let’s start with vulnerability. What is a vulnerability?

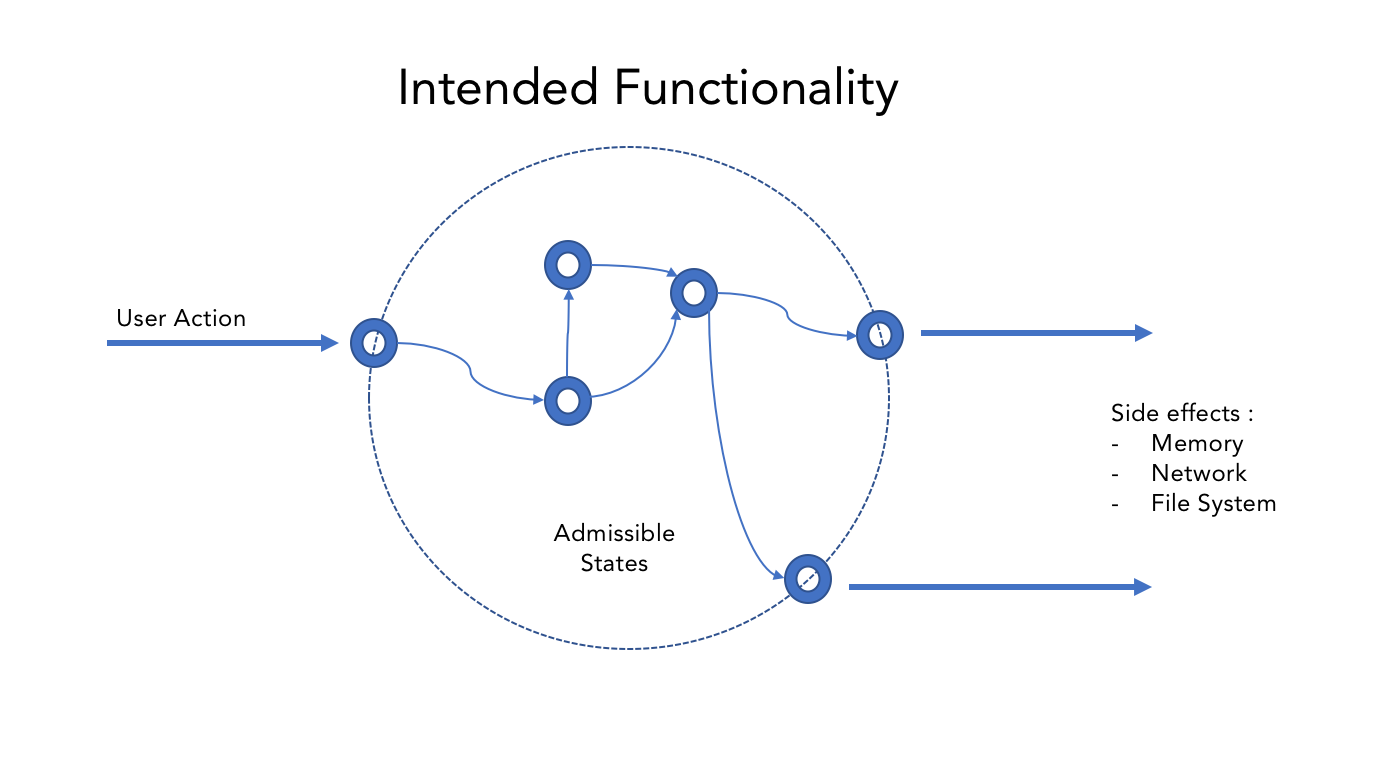

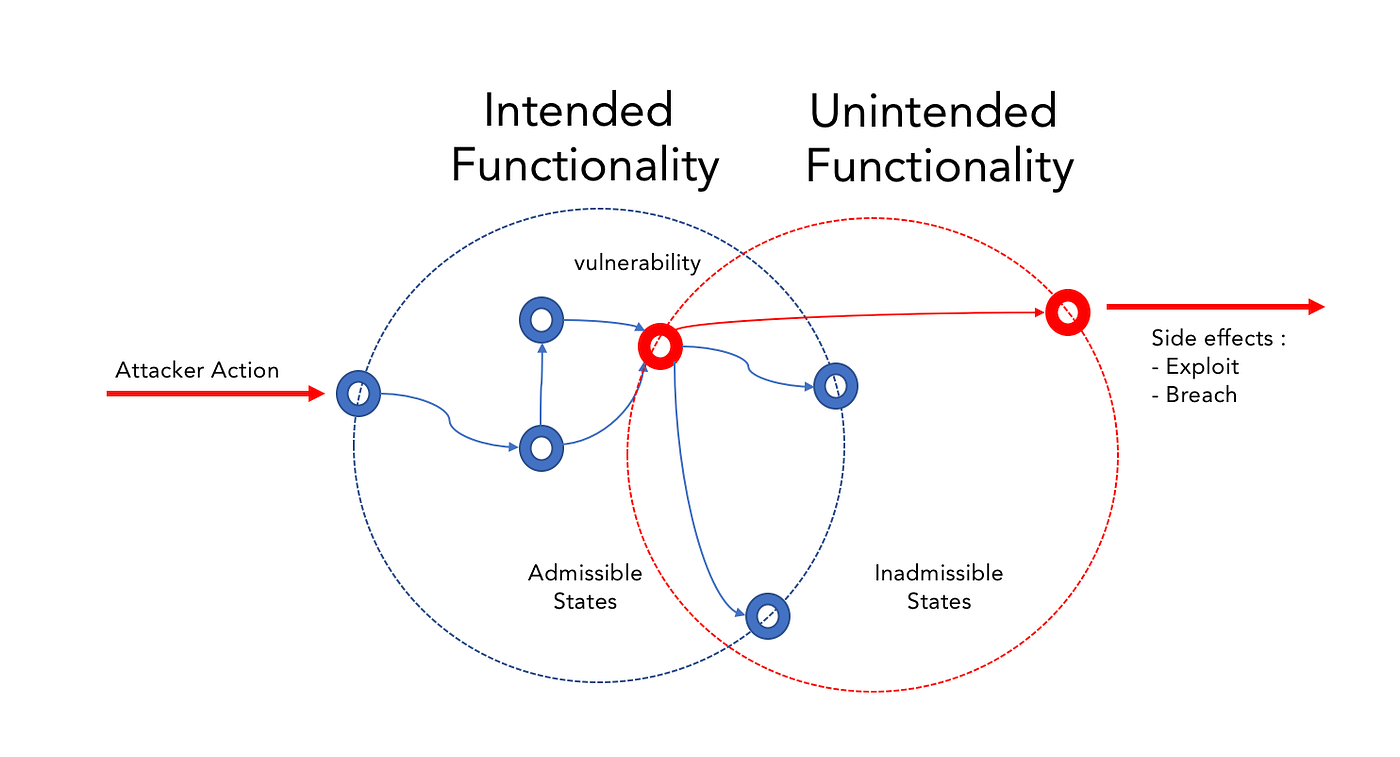

A vulnerability is an opportunity to use input data to drive the application’s control flow into a state that allows the attacker to realize a threat.

Therefore, we can define the security of any application as its ability to always remain within the predetermined set of admissible states that defines its security profile, regardless of the applied input (user action).

In that case, the security analysis problem boils down to checking whether it is impossible for the program to enter any state not allowed by the security profile using an arbitrary input.
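The definition above can be made concrete with a toy model. The sketch below is illustrative only: the session states, the input names and the `debug_cookie` bug are all hypothetical. It treats an application as a finite state machine and searches for an input sequence that leaves the set of admissible states.

```python
from collections import deque

# Hypothetical toy application: a login session modelled as a finite state machine.
# Keys are (state, input) pairs, values are the next state.
TRANSITIONS = {
    ("anonymous", "login_ok"): "authenticated",
    ("anonymous", "login_fail"): "anonymous",
    ("authenticated", "logout"): "anonymous",
    ("authenticated", "view_profile"): "authenticated",
    # Assumed bug: a crafted request moves an anonymous user into the admin state.
    ("anonymous", "debug_cookie"): "admin",
}

# Security profile (assumption): only these states are admissible.
ADMISSIBLE = {"anonymous", "authenticated"}


def find_violation(start, transitions, admissible):
    """Breadth-first search over states.

    Returns the shortest input sequence that reaches an inadmissible state,
    or None if every reachable state is admissible.
    """
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, path = queue.popleft()
        if state not in admissible:
            return path
        for (src, symbol), dst in transitions.items():
            if src == state and dst not in seen:
                seen.add(dst)
                queue.append((dst, path + [symbol]))
    return None


violation = find_violation("anonymous", TRANSITIONS, ADMISSIBLE)
```

Here `violation` is `["debug_cookie"]`. The search terminates only because the model has finitely many states, which is exactly the property real programs tend to lack.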

In order to further understand admissible states, let’s shift our focus to understanding the security posture of an application, both in

- non-operational (static analysis) state and

- operational (dynamic analysis) state

The Static (Non Operational) Lens

An application can be described as a mathematical abstraction that is equivalent to a Turing machine.

However, in real programs only some code fragments are truly equivalent to a Turing machine. In terms of computational power, linear bounded automata, stack machines, and finite state machines all rank below a Turing machine.

Is it possible to determine all states of a computational process?

In any typical application (whether monolithic or a microservice) there is an infinite number of them.

“It is impossible to use a program to classify an algorithm implemented by another program. It is also impossible to use a program to prove that another program doesn’t enter certain states on any inputs”

~ Henry Gordon Rice, 1953

In essence, the whole process of any program’s execution is a sequence of changing states that is determined by the program’s source code.

Now suppose we had an analyzer that could decide, for any program, whether that program hangs. Imagine what happens if we use that analyzer to answer this question: will the analyzer hang if it tries to analyze itself analyzing itself?

The above is a loose summary of the proof of the halting theorem, formulated in 1936 by Alan Turing, the founder of modern theoretical computer science. The theorem states that no program can analyze an arbitrary program and decide whether that program would halt on a given input.

Therefore, we can effectively analyze only those code fragments that are equivalent to one of the weaker models above. In practice it means that:

- We can thoroughly analyze a code fragment without any program loops or recursion, because it is equivalent to a finite state machine;

- We can analyze a code fragment with some program loops or recursion if the exit conditions do not depend on input, by considering it as a finite state machine or a stack machine;

- If the exit conditions for the program loop or recursion depend on input whose length is reasonably limited, in some cases we can analyze the fragment as a system of linear bounded automata or a system of small Turing machines.
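As a rough illustration of the first case in the list above, the sketch below uses Python’s standard `ast` module to triage a fragment as loop-free or not. The function name and the two labels are assumptions made for this example, and the check is deliberately coarse: it sees only `for`/`while` statements and direct self-recursion, not comprehensions or mutual recursion.

```python
import ast


def classify_fragment(source: str) -> str:
    """Coarse triage: is the fragment free of loops and direct recursion?"""
    tree = ast.parse(source)

    # Explicit loop statements anywhere in the fragment.
    has_loop = any(
        isinstance(node, (ast.For, ast.AsyncFor, ast.While))
        for node in ast.walk(tree)
    )

    # Direct recursion only: a function whose body calls its own name.
    has_recursion = False
    for fn in ast.walk(tree):
        if isinstance(fn, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for node in ast.walk(fn):
                if (
                    isinstance(node, ast.Call)
                    and isinstance(node.func, ast.Name)
                    and node.func.id == fn.name
                ):
                    has_recursion = True

    if not has_loop and not has_recursion:
        return "loop-free"      # candidate for exhaustive, FSM-style analysis
    return "needs-review"       # exit conditions must be inspected separately


straight_line = "def f(x):\n    return x + 1\n"
looping = "def g(n):\n    while n:\n        n -= 1\n"
```

`classify_fragment(straight_line)` yields `"loop-free"` and `classify_fragment(looping)` yields `"needs-review"`. Deciding whether a loop’s exit condition depends on input is the harder part and is not attempted here.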

There are several classic approaches to static code analysis, which use different models for producing the properties of the code under examination.

The most primitive and obvious approach is signature search. It is based on looking for occurrences of some template in a syntactic representation of the code (usually a token stream or an abstract syntax tree).
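A minimal sketch of signature search over an abstract syntax tree, using Python’s standard `ast` module. The signature list and the sample snippet are assumptions chosen for illustration; real tools ship far larger rule sets.

```python
import ast

# Hypothetical signature list: call names this sketch treats as risky.
SIGNATURES = {"eval", "exec", "os.system"}


def call_name(node: ast.Call) -> str:
    """Render the callee as a dotted name where possible, e.g. os.system."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return ""


def signature_search(source: str):
    """Return sorted (line, name) pairs for every call matching a signature."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and call_name(node) in SIGNATURES:
            hits.append((node.lineno, call_name(node)))
    return sorted(hits)


SAMPLE = "import os\nos.system('ls')\nx = eval('1 + 1')\nprint(x)\n"
hits = signature_search(SAMPLE)
```

Both calls in `SAMPLE` are flagged even though their arguments are constants, which shows the weakness of the approach: a signature match says nothing about whether attacker-controlled data can reach the call.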

More complex approaches model how the code executes rather than how it is represented. Such models usually can answer this question:

Can a data flow under external control reach such a control flow point that it creates a vulnerability?

We need to consider reachability conditions both for potentially vulnerable control flow points and for combinations of values of the data flows that can reach those points. Based on that information, we can create a system of equations whose set of solutions will give us all possible inputs that are necessary to reach the potentially vulnerable point in the program. The intersection of this set with the set of all possible attack vectors will produce the set of all inputs that bring the program to a vulnerable state.
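The intersection described above can be sketched on a deliberately tiny input domain. Everything here is hypothetical: the path condition, the 10-byte buffer and the bounded domain are assumptions for the example. Real analyzers typically hand the path conditions to a constraint solver instead of enumerating inputs.

```python
from itertools import product


# Hypothetical path condition guarding a sink in the program under analysis:
#     if length > 8 and not sanitized:
#         copy_into_fixed_buffer(data)
def reaches_sink(length: int, sanitized: bool) -> bool:
    return length > 8 and not sanitized


# Bounded input domain (assumption): lengths 0..12, sanitized or not.
DOMAIN = list(product(range(13), [True, False]))

# Solutions of the reachability conditions: inputs that arrive at the sink.
reachable = {inp for inp in DOMAIN if reaches_sink(*inp)}

# Hypothetical attack vectors: inputs long enough to overflow a 10-byte buffer.
attack_vectors = {inp for inp in DOMAIN if inp[0] > 10}

# Inputs that both reach the sink and constitute an attack.
exploitable = reachable & attack_vectors
```

In this toy domain `exploitable` is `{(11, False), (12, False)}`: lengths 9 and 10 reach the sink but do not overflow, and sanitized inputs never reach it at all.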

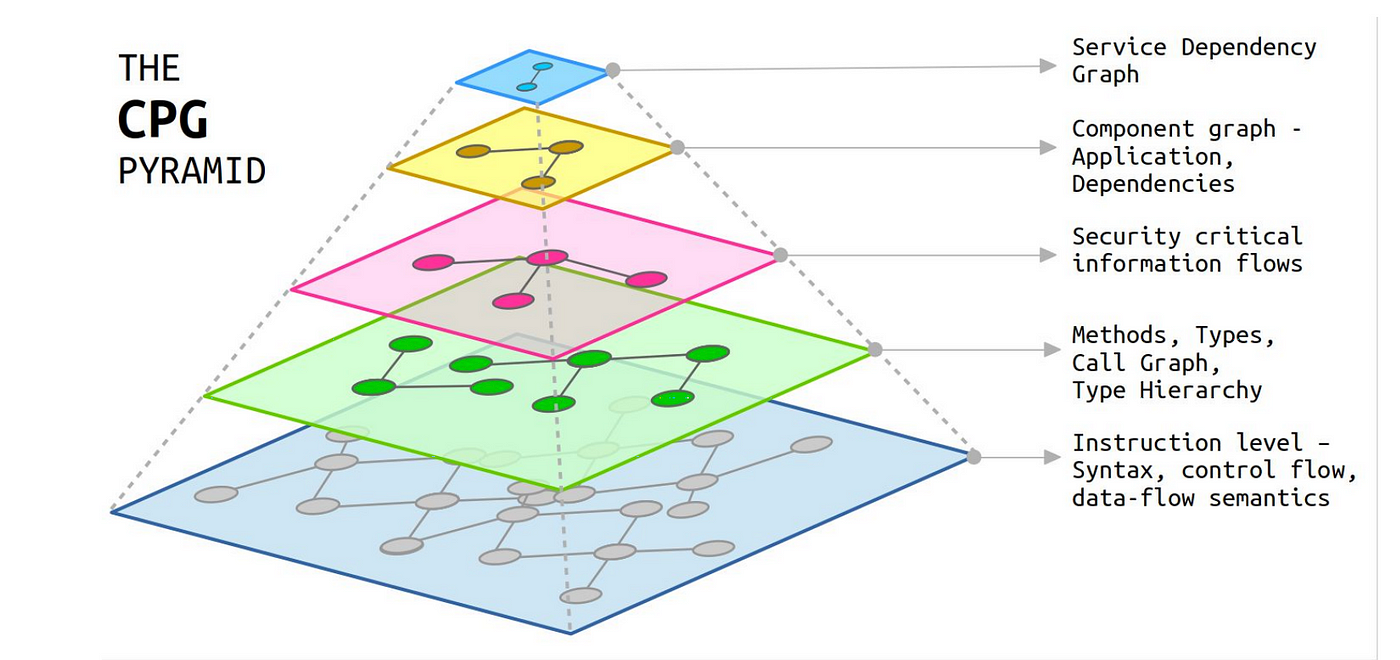

We thus need a novel approach to address the inherent weaknesses of the models illustrated above. This is where the Semantic Code Property Graph shines.

Semantic Code Property Graph —https://drive.google.com/uc?export=download&id=1VdD-NORXhqg3ywFSQfXvEQSPCiJVuOLS

The code property graph is a language-neutral IR (intermediate representation) designed specifically for code querying. It focuses on program semantics by abstracting away from the source language. For further details refer to this article.
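To give a feel for what querying such a graph looks like, here is a toy property graph with labelled edges and a single reachability query. It is not the actual Semantic Code Property Graph schema: the node ids, the property names and the `DATA_FLOW` and `CONTROL_FLOW` edge labels are all assumptions made for this sketch.

```python
from collections import defaultdict


class PropertyGraph:
    """Toy property graph: nodes carry properties, edges carry a label."""

    def __init__(self):
        self.nodes = {}                  # node id -> dict of properties
        self.edges = defaultdict(list)   # (source id, label) -> [target ids]

    def add_node(self, node_id, **props):
        self.nodes[node_id] = props

    def add_edge(self, src, label, dst):
        self.edges[(src, label)].append(dst)

    def flows(self, src, dst, label="DATA_FLOW"):
        """True if dst is reachable from src along edges with the given label."""
        stack, seen = [src], set()
        while stack:
            cur = stack.pop()
            if cur == dst:
                return True
            if cur in seen:
                continue
            seen.add(cur)
            stack.extend(self.edges.get((cur, label), []))
        return False


g = PropertyGraph()
g.add_node("param", kind="PARAMETER", name="user_id", source=True)
g.add_node("concat", kind="CALL", name="string_concat")
g.add_node("exec", kind="CALL", name="execute_sql", sink=True)
g.add_node("log", kind="CALL", name="write_log", sink=True)
g.add_edge("param", "DATA_FLOW", "concat")
g.add_edge("concat", "DATA_FLOW", "exec")
g.add_edge("concat", "CONTROL_FLOW", "log")  # executes next, but receives no user data

sources = [n for n, p in g.nodes.items() if p.get("source")]
sinks = [n for n, p in g.nodes.items() if p.get("sink")]
findings = [(s, t) for s in sources for t in sinks if g.flows(s, t)]
```

The query reports only `("param", "exec")`. The `log` sink is reachable by control flow but not by data flow, so it is not flagged, which is the kind of distinction a signature search cannot make.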

The Dynamic (Operational) Lens

Unlike the static approach, where program code is analyzed without actually being executed, the dynamic approach (Dynamic Application Security Testing, DAST) requires having a runtime environment and executing the program on some inputs that are most useful for analysis purposes.

Simply put, we can call DAST a “method of informed trial and error”:

“Let’s feed these input data, which are characteristic for that kind of attack, to the program and see what happens.”

This method has obvious drawbacks: In many cases we can’t quickly deploy the system to be analyzed (sometimes we can’t even build it), the system’s transition to a certain state may be the result of processing the previous inputs, and a comprehensive analysis of a real system’s behavior requires feeding it so many inputs that it is utterly impractical to try testing the system on each of them.
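The trial-and-error loop can be sketched in a few lines. The target function, its bug and the two payloads below are hypothetical, and the oracle (the payload comes back unescaped) is a simplification of what a real scanner checks.

```python
import html


def render_comment(author: str, body: str) -> str:
    # Hypothetical system under test: escapes the body but forgets the author.
    return f"<p><b>{author}</b>: {html.escape(body)}</p>"


# Inputs characteristic of cross-site scripting attacks (illustrative only).
PAYLOADS = [
    "<script>alert(1)</script>",
    "\"><img src=x onerror=alert(1)>",
]


def probe(target, fields):
    """Feed each payload into each field and record unescaped reflections."""
    findings = []
    for field in fields:
        for payload in PAYLOADS:
            args = {name: "benign" for name in fields}
            args[field] = payload
            if payload in target(**args):   # oracle: payload reflected verbatim
                findings.append((field, payload))
    return findings


findings = probe(render_comment, ["author", "body"])
```

Only the `author` field is reported, once per payload. The probe finds what its payload list and oracle allow it to find and nothing more, which is the coverage problem described above.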

IAST — When SAST meets DAST (the `T` still means Testing)

Not long ago, Interactive Application Security Testing (IAST), an approach that combines the strengths of SAST and DAST, was considered promising. IAST’s distinctive feature is that the SAST part generates inputs and templates of the expected results, and the DAST part tests the system on these inputs, prompting the human operator to intervene in ambiguous situations. The irony of this approach is that it has inherited both the strengths and the weaknesses of SAST and DAST, which calls its practicality into question.

Also, testing is often not a practical representation of all the conditions a running application is subjected to in a production environment. Most often the effectiveness of testing is directly proportional to the efficacy of the security test suite. Such test suites focus on testing business workflows, which often cannot emulate an attacker attempting to exploit a running application.

Certain organizations create security programs in which red teams are designated to emulate the behavior and techniques of likely attackers as realistically as possible, and blue teams are designated to defend against both attackers and red teams. Red teams fuzz the application to probe its attack surface (using feedback and return-oriented programming (ROP) techniques).

If we really want to push forward our collective understanding of application security and increase the value of our work, we need to completely change the way we think. We need to connect pools of data. Yes, we need to know what vulnerabilities websites currently have — that matter. We need to know what vulnerabilities various application security testing methodologies actually test for. Then we need to overlap this data set with what vulnerabilities attackers predominately find and exploit. And finally, within that data set, which exploited vulnerabilities lead to the largest dollar losses.

~ excerpt from Jeremiah Grossman’s post

RASP — Your Application’s Hazmat suit

Typically RASP is instrumented with an application. When the application bootstraps itself in production, the RASP technique uses dynamic binary instrumentation or bytecode instrumentation (BCI) to add new security sensors and analysis capability to the application’s entire surface. This process is very similar to how New Relic or AppDynamics instrument an application for performance.

Instrumenting the entire surface imposes overhead on the application, which affects both latency and throughput.

These security sensors are tripped on every request in order to evaluate request metadata and other contextual information. If it looks like an attack, the request is tracked through the application. If the attack is causing the application to enter an inadmissible state (inferred from threat landscape or an adaptive learning system), it gets reported as a probe and the attack is blocked.
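A Python decorator can stand in for an instrumented sensor. This is a loose analogy, not how a production RASP agent works: real agents rewrite bytecode or binaries, and the detection rule, exception name and `find_user` function below are hypothetical. The sketch also blocks as soon as the input looks hostile, where a real agent would first track the request through the application.

```python
import functools
import re


class AttackBlocked(Exception):
    """Raised by a sensor instead of letting the guarded call proceed."""


# Hypothetical detection rule: crude SQL injection markers.
SQLI_PATTERN = re.compile(r"'\s*(or|and|union)\b|--|;", re.IGNORECASE)


def sensor(func):
    """Wrap a function so every string argument is inspected before the call."""
    @functools.wraps(func)
    def guarded(*args, **kwargs):
        for value in list(args) + list(kwargs.values()):
            if isinstance(value, str) and SQLI_PATTERN.search(value):
                raise AttackBlocked(f"{func.__name__}: suspicious input {value!r}")
        return func(*args, **kwargs)
    return guarded


@sensor
def find_user(username: str) -> str:
    # Hypothetical vulnerable sink: the query is built by string formatting.
    return f"SELECT * FROM users WHERE name = '{username}'"


benign_query = find_user("alice")

try:
    find_user("x' OR '1'='1")
    blocked = False
except AttackBlocked:
    blocked = True
```

The benign call passes through untouched and the injection attempt is stopped before the query is built. Every guarded call pays for the inspection, which is where the latency and throughput cost comes from.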

Harnessing the Power of Connected Feedback Loops

Web application security vulnerabilities such as SSRF (server-side request forgery), deserialization, SQL injection and XSS (cross-site scripting) now account for the majority of all application security issues. Commonly used solutions such as client-side sanitization, penetration testing, and application firewalls do not provide an adequate solution to these problems.

A feedback loop involves four distinct stages. First comes the data: A behavior must be measured, captured, and stored. This is the evidence stage. Second, the information must be relayed to the individual, not in the raw-data form in which it was captured but in a context that makes it emotionally resonant. This is the relevance stage. But even compelling information is useless if we don’t know what to make of it, so we need a third stage: consequence. The information must illuminate one or more paths ahead. And finally, the fourth stage: action. There must be a clear moment when the individual can recalibrate a behavior, make a choice, and act. Then that action is measured, and the feedback loop can run once more, every action stimulating new behaviors that inch us closer to our goals.

~ Thomas Goetz (thomas@wired.com)

How can we establish a continuous feedback loop between static (offense) analysis and dynamic (defense) runtime behavior?

In the static part, the technique builds an estimation model that baselines the vulnerable paths to track. The dynamic part is then baselined against this model. Inputs applied along the vulnerable paths are observed to verify whether they are driving the application into an inadmissible state. This reinforcement mechanism helps correlate a threat with a vulnerability, and it reduces the cognitive load of weeding out the false positives these systems generate when operating in isolation.
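One way to picture the correlation step is as set arithmetic over two data sources. The sketch below is an assumption about how such a loop could be wired, not a description of any particular product: the function names, the event format and the four buckets are all hypothetical.

```python
# Hypothetical static findings: functions on paths the static model flagged.
static_findings = {"find_user", "render_comment", "load_config"}

# Hypothetical runtime events: (function, reached_inadmissible_state).
runtime_events = [
    ("find_user", True),
    ("render_comment", False),
    ("health_check", True),   # not on any statically flagged path
]


def correlate(static_findings, runtime_events):
    """Split findings by whether runtime behaviour confirms the static model."""
    observed = {fn for fn, _ in runtime_events}
    bad = {fn for fn, inadmissible in runtime_events if inadmissible}
    confirmed = static_findings & bad
    return {
        "confirmed": confirmed,                                    # flagged and exploited at runtime
        "unconfirmed": (static_findings & observed) - confirmed,   # flagged, exercised, no bad state
        "unexercised": static_findings - observed,                 # flagged, never seen at runtime
        "runtime_only": bad - static_findings,                     # bad state the static model missed
    }


report = correlate(static_findings, runtime_events)
```

With the sample data, `find_user` is confirmed, `render_comment` is a candidate false positive, `load_config` has not been exercised, and `health_check` is fed back to the static side as a path its model missed.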

photo: Life of Pix
